I am a Ph.D. student at the University of Hong Kong (HKU-MMLab) (Sep. 2025 - .), where Prof. Xihui Liu is my advisor.

Previously, I was a researcher working on generalist robotics with Dr. Tao Kong at ByteDance Research (Seed-Robotics) (Jul. 2024 - Aug. 2025). I received my Master’s degree from Fudan University (Sep. 2021 - Jun. 2024), where Prof. Tao Chen is my advisor. I am fortunate to work closely with Dr. Hongyuan Zhu from A*STAR, Singapore, and Dr. Gang Yu, Dr. Xin Chen, and Dr. Chi Zhang from Tencent. Before this, I obtained my Bachelor’s degree also from Fudan University (Sep. 2017 - Jun. 2021).

I am broadly interested in scalable training methods. My long-term research goal is to develop scalable, robust and generalized multi-modality systems that can understand, generate, and interact with the physical world.

📣 I am starting a new phase, and I am actively looking for positions for research intern!

🔥 News

- Jul. 2025. 🤖🤖 We release our latest Vision-Language-Action model, GR-3[demos]!

- Apr. 2025. 🎉🎉 We release OmniSVG

, a family of VLMs that generate SVGs. Now accepted to NeurIPS 2025!

- Sep. 2024. 🎉🎉 Two papers accepted to NeurIPS 2024, one focuses on foundational 3D generative models (MeshXL

), and another one explores Mamba architecture for 3D detection (3DET-Mamba).

- Jul. 2024. 🎉🎉 I joined ByteDance Research as a full-time researcher, working on generalist robotics.

- May. 2024. 🎉🎉 We release MeshXL

, a family of generative 3D foundation models for 3D meshes.

- May. 2024. 🎉🎉 I successfully defended my master’s thesis! [defense slides]

- Apr. 2024. 🎉🎉 Our state-of-the-art 3D dense captioning method Vote2Cap-DETR++

, is accepted to T-PAMI 2024.

- Feb. 2024. 🎉🎉 Our Large Language 3D Assistant, LL3DA

, is accepted to CVPR 2024.

- Jan. 2024. 🐧🐧 I joined Tencent as a research intern, working on 3D generation.

- Oct. 2023. 🥇🥇 Win the Scan2Cap Challenge at ICCV 2023.

- Feb. 2023. 🎉🎉 Our Vote2Cap-DETR

is accepted to CVPR 2023.

📝 Selected Publications

My previous research mainly focuses on building language models that can understand (Vote2Cap-DETR, LL3DA), generate (MeshXL, OmniSVG), and interact (GR-3) with the physical world. Please find my full publication list at google scholar.

GR-3 Technical Report

Tech Report | Alphabetical Order

Chilam Cheang, Sijin Chen, Zhongren Cui, Yingdong Hu, Liqun Huang, Tao Kong, Hang Li, Yifeng Li, Yuxiao Liu, Xiao Ma, Hao Niu, Wenxuan Ou, Wanli Peng, Zeyu Ren, Haixin Shi, Jiawen Tian, Hongtao Wu, Xin Xiao, Yuyang Xiao, Jiafeng Xu, Yichu Yang

- GR-3 follows instructions and generalizes to novel objects and concepts.

- We can adapt GR-3 to novel settings with few-shot human trajectories.

- GR-3 is able to proceed long-horizon and dexterous manipulation tasks.

OmniSVG: A Unified Scalable Vector Graphics Generation Model

NeurIPS 2025 |

Yiying Yang$^\star$, Wei Cheng$^\star$, Sijin Chen, Xianfang Zeng, Jiaxu Zhang, Liao Wang, Gang Yu, Xinjun Ma, Yu-Gang Jiang

project | arXiv | github | huggingface

- OmniSVG progressively generates SVGs spanning from simple icons to intricate anime characters.

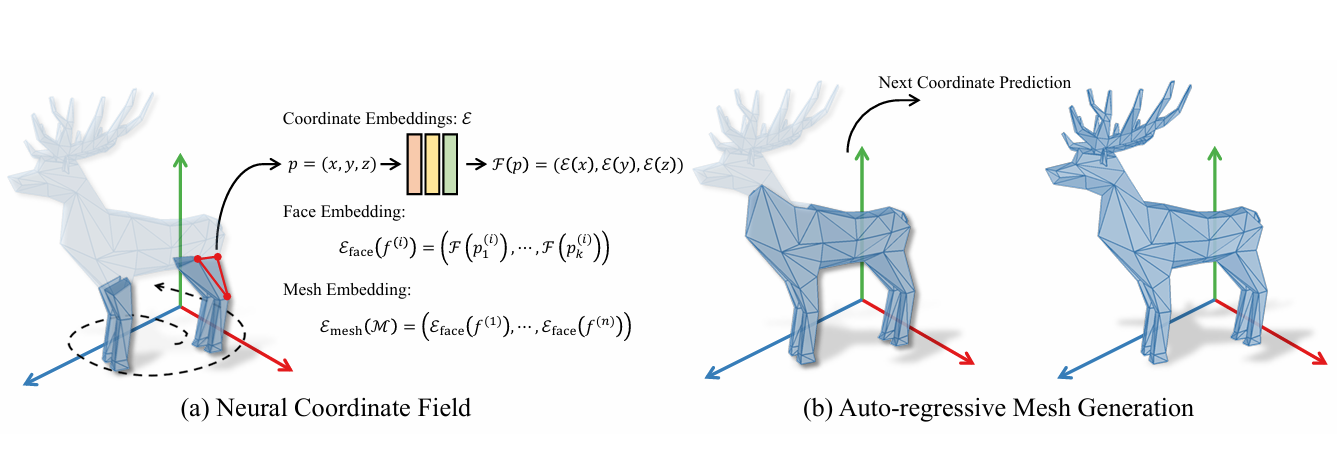

MeshXL: Neural Coordinate Field for Generative 3D Foundation Models

NeurIPS 2024 |

Sijin Chen, Xin Chen$^{\dagger}$, Anqi Pang, Xianfang Zeng, Wei Cheng, Yijun Fu, Fukun Yin, Yanru Wang, Zhibin Wang, Chi Zhang, Jingyi Yu, Gang Yu, Bin Fu, Tao Chen$^{\ddagger}$

- MeshXL turns a 3D mesh into one unique coordinate sequence, facilitating an end-to-end training pipeline for large-scale 3D mesh data.

- 🎉 Please also see our MeshAnything

, a model that mimics human artists in extracting meshes from any 3D representation, which is accepted to ICLR 2025

LL3DA: Visual Interactive Instruction Tuning for Omni-3D Understanding, Reasoning, and Planning

CVPR 2024 |

Sijin Chen, Xin Chen$^{\dagger}$, Chi Zhang, Mingsheng Li, Gang Yu, Hao Fei, Hongyuan Zhu, Jiayuan Fan, Tao Chen$^{\ddagger}$

paper | project | arXiv | github | youtube

- Propose a Large Language 3D Assistant that responds to both visual interactions and textual instructions in complex 3D environments.

- 🎉 Please also see our M3DBench

, a dataset querying 3D language models with multi-modal prompts. Now accepted to ECCV 2024.

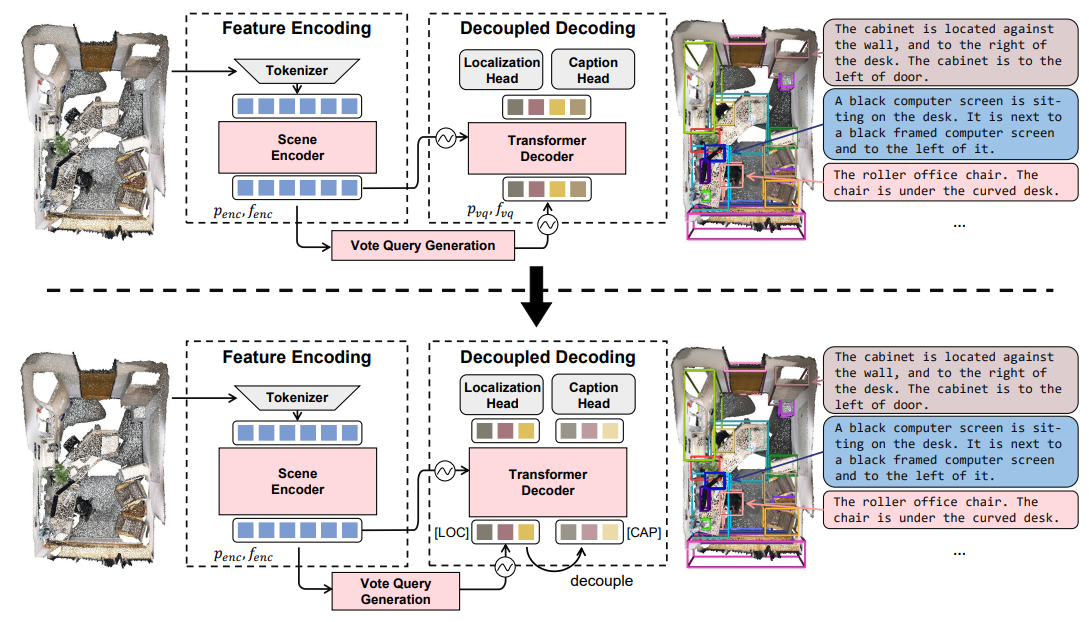

Vote2Cap-DETR++: Decoupling Localization and Describing for End-to-End 3D Dense Captioning

T-PAMI 2024 |

Sijin Chen, Hongyuan Zhu, Mingsheng Li, Xin Chen, Peng Guo, Yinjie Lei, Gang Yu, Taihao Li, Tao Chen$^{\dagger}$

- Decoupled localization and captioning feature extraction with parallel task decoding for 3D Dense Captioning.

- 🥇 Winner of the Scan2Cap Challenge in the 3rd Language for 3D Scene Workshop at ICCV 2023. [talk]

- My preliminary paper, Vote2Cap-DETR, is accepted to CVPR 2023.

🥇 Awards and Scholarships

- Apr. 2025. Spot Bonus at ByteDance AI-Lab-Research (Breakthrough in new fields, 2025-Q1), comes with a trophie😮.

- Apr. 2024. Award for Outstanding Graduate Student (rank 1/24).

- Oct. 2023. 1st place of the Scan2Cap Challenge in the 3rd Language for 3D Scene Workshop at ICCV 2023.

- Sep. 2023. National Scholarship (rank 1/46).

- Sep. 2022. 2nd prize of the Scholarship for Outstanding Students of Master’s Degrees.

- Sep. 2021. Award for the Scholarship for Outstanding Students of Master’s Degrees.

- Jun. 2021. 2nd prize of the Scholarship for Outstanding Students.

📖 Educations

- Sep. 2025 - .. Ph.D. student at the University of Hong Kong.

- Sep. 2021 - Jun. 2024. Master student at Fudan University.

- Sep. 2017 - Jun. 2021. Bachelor student at Fudan University.

💬 Oral Presentations

-

Jul. 2024. “MeshXL: Neural Coordinate Field for Generative 3D Foundation Models”. MeshXL paves the way for scaling up training on large-scale 3D mesh data. Our mesh representation turns a 3D mesh into one unique coordinate sequence, which enables us to simplify our architecture design into a decoder-only transformer model, facilitating an end-to-end training pipeline for large-scale 3D mesh data. A technical report at miHoYo.

-

Oct. 2023. “Vote2Cap-DETR: A Set-to-Set Perspective Towards 3D Dense Captioning”. By treating 3D Dense Captioning as a translation task from a set of object queries into a set of ``box-caption’’ pairs, we present a set-to-set perspective towards 3D Dense Captioning. A winner presentation for the Scan2Cap challenge at ICCV 2023. [talk | slides]

-

Jun. 2023. “End-to-End 3D Dense Captioning with Vote2Cap-DETR”. We present an end-to-end transformer model for localizing and describing objects in parallel within diverse 3D environments. A paper presentation at VALSE 2023, Wuxi, China.